Fooled by the fakes: Cognitive differences in perceived claim accuracy and sharing intention of non-political deepfakes

Abstract

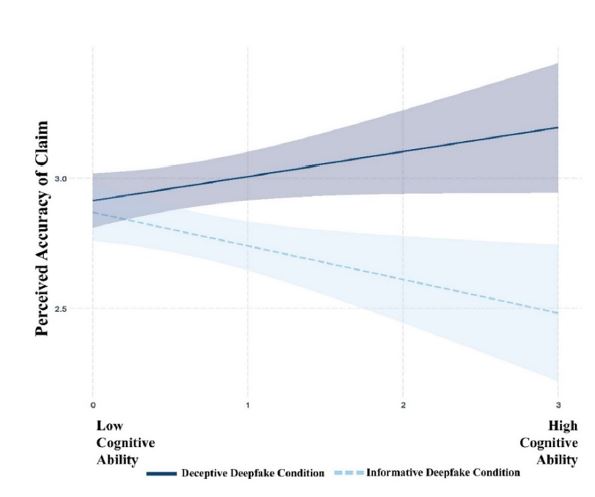

We examine how individual differences influence the perceived accuracy of deepfake claims and the intention to share them. Rather than a political deepfake, we use a non-political deepfake of a social media influencer as the stimulus, presented in an educational condition and a deceptive condition. We find that individuals are more likely to perceive the deepfake claim as true when the deepfake is shown without informative cues than when such cues are present. Individuals are also more likely to share deepfakes when they consider the fabricated claim accurate. Moreover, cognitive ability plays a moderating role: when informative cues are present (educational condition), individuals with high cognitive ability are less trustful of deepfake claims. Unexpectedly, when the informative cues are missing (deceptive condition), these same individuals are more likely to consider the claim true and to share it. The findings suggest that adding corrective labels can help reduce the inadvertent sharing of disinformation, and that user biases should be considered in understanding public engagement with disinformation.